IBM Flash System 7200

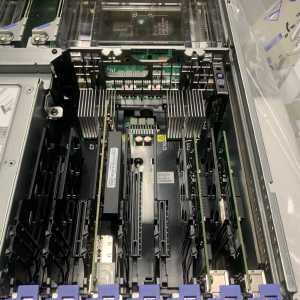

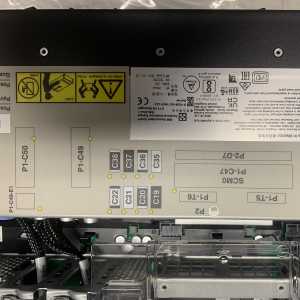

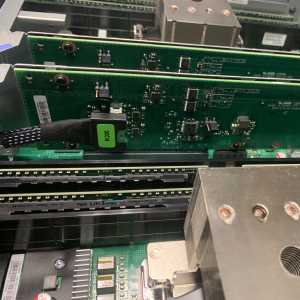

The IBM Flash System 7200 Control Enclosure has two clustered, hot-swappable node canisters that contain hot-swappable fan modules, memory DIMMs, batteries, and PCIe adapters. The enclosure also houses two AC power supplies that are redundant and hot-swappable. Concurrent code load enables applications to remain online during firmware upgrades to all components, including the flash drives.

Rebuild Areas

A rebuild area has capacity equivalent to a single drive. The more rebuild areas you have, the more drives can fail one after another. Once a failed drive has been replaced, the data is copied back from the distributed spare space to the replacement drive. This is again a case of writing to a single drive, which can take some time. Take the copy-back time into account: replacing a drive does not immediately give you back the redundancy.
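The bookkeeping described above can be sketched as a toy model. This is only an illustration: the class and method names are hypothetical and do not correspond to any IBM API. It assumes each failed drive consumes one rebuild area, and that the area is only released when the copy-back finishes.

```python
from dataclasses import dataclass


@dataclass
class DraidSpareModel:
    """Toy model of distributed rebuild areas (hypothetical names)."""
    rebuild_areas: int          # total rebuild areas configured in the array
    in_use: int = 0             # areas currently holding rebuilt data
    copy_back_running: int = 0  # replaced drives still being copied back

    def free_areas(self) -> int:
        return self.rebuild_areas - self.in_use

    def fail_drive(self) -> bool:
        """A drive fails; True if a free rebuild area can absorb the rebuild."""
        if self.free_areas() == 0:
            return False
        self.in_use += 1
        return True

    def replace_drive(self) -> None:
        """Swapping the drive only starts the copy-back; no area is freed yet."""
        if self.copy_back_running >= self.in_use:
            raise ValueError("no failed drive is waiting for a replacement")
        self.copy_back_running += 1

    def finish_copy_back(self) -> None:
        """Copy-back completed; the rebuild area becomes available again."""
        if self.copy_back_running == 0:
            raise ValueError("no copy-back is running")
        self.copy_back_running -= 1
        self.in_use -= 1
```

With one rebuild area, `fail_drive()` followed by `replace_drive()` still leaves `free_areas()` at 0. The area only comes back after `finish_copy_back()`.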

At the moment, Distributed RAID on Storwize cannot make use of “global spares”. The DRAID array itself contains the spare capacity (the rebuild area), so there is no need to assign a dedicated SSD as a spare. Instead, add all the SSDs into the pool.

Disk Expansion

DRAID expansion lets you dynamically add one or more drives to an existing, in-use DRAID array and so increase its drive count. The expansion process is non-disruptive: new drives are integrated and data is re-striped to maintain the algorithmic placement of strips across the existing and new components.

- All pools and volumes remain online and available during the expansion.

- Between 1 and 12 drives can be added in a single operation or task.

- Only one expansion task can be in progress on a given DRAID array at any point in time.

- Only one expansion is permitted per storage pool at any point in time.

- New drives can be used to increase the number of spare areas.

- Only add drives whose capability matches that of the drives already in the DRAID array.

- Only the component count or the number of spare areas can be increased.

- Expansion does not allow you to change the stripe width or geometry.
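The limits listed above can be expressed as a simple pre-check. The following is a minimal sketch, not an IBM tool: `ArrayState`, `check_expansion`, and the `drive_class` strings are hypothetical names invented for illustration, and "matching capability" is simplified to an exact match on a class label.

```python
from __future__ import annotations

from dataclasses import dataclass

MAX_DRIVES_PER_EXPANSION = 12  # upper limit taken from the list above


@dataclass
class ArrayState:
    """Hypothetical snapshot of a DRAID array and its pool."""
    drive_class: str                  # label for the capability of existing drives
    expansion_in_progress: bool       # an expansion is already running on this array
    pool_expansion_in_progress: bool  # an expansion is already running in the pool


def check_expansion(array: ArrayState, new_drive_classes: list[str]) -> list[str]:
    """Return the reasons an expansion request would be rejected (empty if OK)."""
    problems = []
    count = len(new_drive_classes)
    if not 1 <= count <= MAX_DRIVES_PER_EXPANSION:
        problems.append(f"drive count {count} is outside 1..{MAX_DRIVES_PER_EXPANSION}")
    if array.expansion_in_progress:
        problems.append("an expansion is already in progress on this array")
    if array.pool_expansion_in_progress:
        problems.append("an expansion is already in progress in this storage pool")
    if any(cls != array.drive_class for cls in new_drive_classes):
        problems.append("new drives do not match the drives already in the array")
    return problems
```

An empty list means the request satisfies the listed constraints. The sketch does not cover stripe width or geometry, because expansion cannot change them.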

The expansion is designed to minimize the impact on end-user applications. It runs as a background task that will take many hours to complete. The exact time depends on how busy the DRAID array is during the expansion and on the performance and capacity of the drives.

As the expansion will take some time to complete, the new free space is drip fed into the pool. Therefore, during the expansion, you will see the pool capacity gradually increase until the expansion is completed.

What happens if a drive fails during expansion?

The rebuild takes priority. Wherever the expansion has got to, it pauses while the distributed rebuild of the missing strips onto one of the spare areas starts and runs to completion. Only when the rebuild is finished and the DRAID array is online (not degraded) does the expansion process resume.

Note that the expansion does take priority over the build-back. Build-back is the final stage a DRAID array goes through after you replace the failed drive, where it copies the data back from the distributed spare areas onto the replacement drive to re-establish the original algorithmic layout.
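The ordering described here (rebuild first, then expansion, then build-back) can be sketched as a small priority selection. The enum and function names are hypothetical and only model the ordering, not how the array firmware actually schedules work.

```python
from enum import IntEnum


class Task(IntEnum):
    """Background tasks; a lower value means a higher priority."""
    REBUILD = 0     # distributed rebuild after a drive failure
    EXPANSION = 1   # re-striping across newly added drives
    BUILD_BACK = 2  # copy-back onto the replacement drive


def next_task(pending):
    """Pick the task that runs now; every other pending task stays paused."""
    return min(pending) if pending else None
```

For example, `next_task({Task.EXPANSION, Task.REBUILD})` selects the rebuild, and `next_task({Task.BUILD_BACK, Task.EXPANSION})` selects the expansion.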

One at a time, or all at once?

Expanding the array requires all existing data in the array to be moved so that it is striped across the new set of drives. The amount of work required is basically the same whether you add one drive or 12. If you have multiple drives to add to an array, add them together in a batch. This takes much less time than adding them one at a time.
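A rough back-of-the-envelope comparison makes the point. This sketch assumes that every expansion pass takes about the same time regardless of how many drives it adds, which is a simplification of "basically the same" above. The `hours_per_pass` value is a hypothetical input, not a measured figure.

```python
import math

MAX_DRIVES_PER_EXPANSION = 12  # single-operation limit stated earlier


def expansion_passes(drives_to_add: int, batch_size: int) -> int:
    """Number of separate expansion operations needed."""
    if not 1 <= batch_size <= MAX_DRIVES_PER_EXPANSION:
        raise ValueError("batch_size must be between 1 and 12")
    return math.ceil(drives_to_add / batch_size)


def total_hours(drives_to_add: int, batch_size: int, hours_per_pass: float) -> float:
    """Estimated total time, assuming each pass costs the same (an assumption)."""
    return expansion_passes(drives_to_add, batch_size) * hours_per_pass
```

Under that assumption, adding 12 drives one at a time needs 12 passes, while adding them in a single operation needs one. With a hypothetical 10 hours per pass, that is 120 hours against 10.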
